A star-studded multicenter workflow study evaluated the AI model DeepSeeNet across 24 clinicians and three image datasets.

Artificial intelligence may not blink, but it is learning to see.

In a diagnostic study published this month in JAMA Network Open, a world-class group of researchers and ophthalmic leaders found that AI assistance improved both the accuracy and efficiency of clinicians diagnosing age-related macular degeneration (AMD), one of the leading causes of vision loss worldwide.

The study was led by an international group of experts from Yale University, the National Eye Institute (NEI) and Duke-NUS Medical School, including Dr. Tiarnán Keenan (United States), Dr. Emily Chew (United States), Prof. Gemmy Cheung (Singapore) and NEI Director Dr. Michael Chiang (United States). Together, they explored how AI might support everyday clinical practice, particularly when diagnosing AMD.

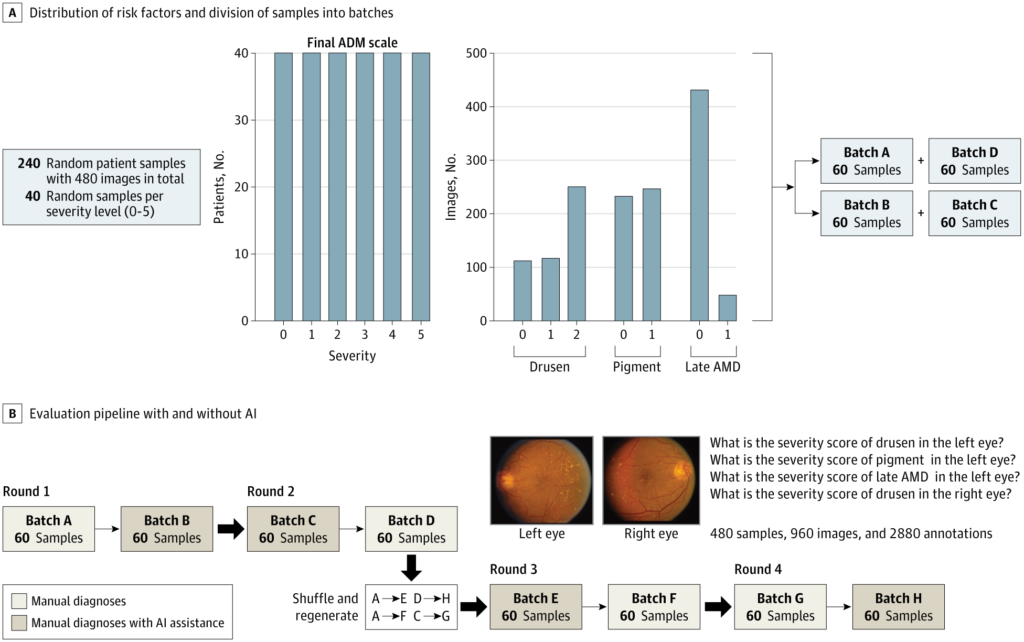

Twenty-four ophthalmologists examined 960 fundus images from 240 patients over four randomized rounds using a deep learning model known as DeepSeeNet.1

But DeepSeeNet, a publicly available model trained on more than 60,000 fundus images, wasn’t there to replace the human eye; it was there to stand beside it and point out what might otherwise be missed.


Structured to reflect real-world clinical rhythm

Many AI models perform well in controlled conditions, but few are tested in the unpredictable currents of real-world clinics. This study aimed to bridge that divide with a multi-round diagnostic workflow designed to evaluate AI’s role in clinician decision-making.

Researchers deployed DeepSeeNet to assist clinicians in grading AMD severity using the Age-Related Eye Disease Study (AREDS) simplified scale. This included drusen size, pigmentary abnormalities and presence of late AMD in each eye.

The dataset included 240 patient cases and 960 images, divided into four randomized batches. Clinicians alternated between manual and AI-assisted diagnosis over four rounds, with a one-month washout and image re-randomization to minimize recall bias.
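
The batching and alternation described above can be sketched in a few lines. This is a minimal illustration and not the study's actual randomization procedure: the function names `make_batches` and `round_modes`, the fixed seed, and the manual-first ordering are assumptions introduced here, and how batches were mapped to individual clinicians and rounds is not specified in this article.

```python
import random

def make_batches(n_cases=240, n_batches=4, seed=0):
    """Shuffle case IDs and split them into equally sized batches."""
    case_ids = list(range(n_cases))
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    rng.shuffle(case_ids)
    size = n_cases // n_batches
    return [case_ids[i * size:(i + 1) * size] for i in range(n_batches)]

def round_modes(n_rounds=4, first="manual"):
    """Alternate manual and AI-assisted grading across rounds (assumed order)."""
    other = "ai_assisted" if first == "manual" else "manual"
    return [first if r % 2 == 0 else other for r in range(n_rounds)]

batches = make_batches()
modes = round_modes()
print("Batch sizes:", [len(b) for b in batches])
print("Round modes:", modes)
```

Re-running `make_batches` with a different seed between rounds would mimic the image re-randomization that, together with the washout, is meant to limit recall bias.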

Participants included 13 retina specialists and 11 general ophthalmologists from 12 institutions. Each completed both assessment types, producing 2,880 annotations: some guided by clinical experience, others by the algorithm’s suggestion.

A lift in accuracy, measured in F1 scores

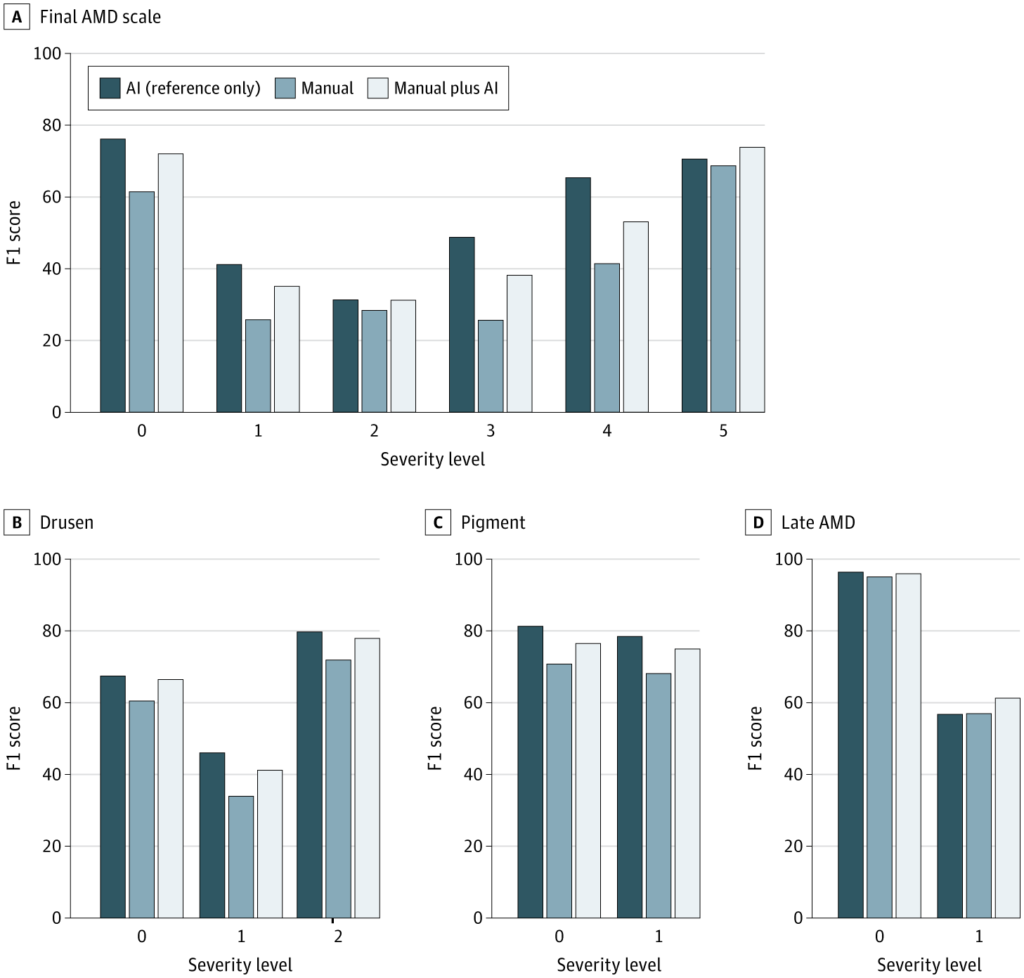

Results showed that AI assistance measurably boosted diagnostic performance. Measured by F1 score, which balances precision and recall (sensitivity), the mean score for overall AMD severity classification improved from 37.71 to 45.52 when clinicians had access to AI support.
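
For readers less familiar with the metric, F1 is conventionally defined as the harmonic mean of precision and recall (sensitivity). The sketch below shows that calculation for a single class. The counts are hypothetical and not taken from the study, and how the authors averaged scores across severity classes is not described in this article; the figures quoted here appear to be on a 0 to 100 scale.

```python
def f1_score(tp, fp, fn):
    """Return F1, the harmonic mean of precision and recall, on a 0-1 scale."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical counts for one severity class (illustrative only).
score = f1_score(tp=30, fp=20, fn=30)
print(f"F1 = {100 * score:.2f} (0-100 scale)")
```

Because the harmonic mean is pulled toward the lower of the two values, a grader cannot score well on F1 by maximizing recall at the expense of precision, or the reverse.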

This improvement reached statistical significance (P < .001), and 23 of 24 clinicians recorded gains in performance. Broken down by individual risk feature:

- Drusen size: F1 score rose from 59.83 to 66.22

- Pigmentary abnormalities: 68.11 to 75.00

- Late AMD: 56.98 to 61.26 (not statistically significant)

Performance improvements varied by clinician, with relative gains in F1 scores ranging from 5.11% to 57.41%. One clinician recorded a marginal decrease of 0.26% between manual and AI-assisted grading.
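
As a rough check on scale, the relative gain in the group means can be computed directly from the figures reported above. This is a simple arithmetic sketch; the helper name `relative_gain` is introduced here for illustration, and the results describe changes in mean scores, not the per-clinician range quoted in the preceding paragraph.

```python
# Mean F1 scores (manual, AI-assisted) as reported in this article.
reported_means = {
    "Overall AMD severity": (37.71, 45.52),
    "Drusen size": (59.83, 66.22),
    "Pigmentary abnormalities": (68.11, 75.00),
    "Late AMD": (56.98, 61.26),
}

def relative_gain(manual, assisted):
    """Percentage change from the manual score to the AI-assisted score."""
    return 100 * (assisted - manual) / manual

gains = {task: relative_gain(m, a) for task, (m, a) in reported_means.items()}
for task, gain in gains.items():
    print(f"{task}: {gain:+.2f}%")
```

On these numbers, the mean overall severity score rises by roughly a fifth, while the late AMD gain is the smallest of the four, consistent with it being the one comparison that did not reach statistical significance.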

AI as co-pilot or solo navigator?

While the clinician-AI partnership generally outperformed unaided clinicians, AI alone often proved to be the sharper diagnostician. For overall AMD severity, the model’s solo performance exceeded that of the human-AI duo for 19 of 24 clinicians. A similar trend was seen for drusen and pigment grading.

Late AMD, however, told a different story. In this task, the human touch won out, with 18 of 24 clinicians outperforming AI alone. This shift may reflect the nuanced interpretation needed for advanced disease, where the shadows of clinical judgment still stretch farther than the model’s reach.

Faster decisions, fewer seconds

Time is currency in the modern clinic, and AI appeared to save some. Among the 19 clinicians who had complete timing data, average diagnostic time in the first round was 39.8 seconds per case without AI. With AI support, that time dropped by 10.3 seconds (P < .001).

Even as clinicians became faster through repetition in later rounds, AI assistance continued to confer a time advantage. Across rounds two through four, the AI arm remained 6.9 to 8.6 seconds faster (P = .01 to .04). The data suggest that AI is not only a second set of eyes, but a quicker one too.
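
To put the round-one figures in proportion, the short calculation below derives the AI-assisted time and the percentage reduction from the two reported numbers. The helper `time_saved_minutes` and the 60-case session are hypothetical, added only to illustrate how per-case savings accumulate.

```python
baseline_s = 39.8  # reported mean seconds per case without AI, round one
saving_s = 10.3    # reported reduction with AI assistance, round one

assisted_s = baseline_s - saving_s
pct_faster = 100 * saving_s / baseline_s
print(f"AI-assisted: {assisted_s:.1f} s per case ({pct_faster:.1f}% faster)")

def time_saved_minutes(n_cases, saving_per_case_s):
    """Total minutes saved over n_cases at a fixed per-case saving."""
    return n_cases * saving_per_case_s / 60

# Hypothetical session of 60 cases at the round-one saving.
print(f"Saved over 60 cases: {time_saved_minutes(60, saving_s):.1f} min")
```

The smaller savings reported for rounds two through four (6.9 to 8.6 seconds) can be passed to the same helper to estimate the effect once clinicians are familiar with the task.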


Refining the model

The researchers enhanced the original DeepSeeNet model by training it on more than 39,000 additional images from the AREDS2 study, producing an upgraded version: DeepSeeNet+. The new model was tested on three datasets:

- AREDS2 (intermediate/late AMD): F1 score improved from 0.5162 to 0.6395

- SEED (multiethnic cohort, Singapore): 0.3895 to 0.5243

- AREDS (original test set): no significant change (0.4755 to 0.4793)

Performance gains in AREDS2 and SEED highlight the benefits of broader training data. The SEED results also support the model’s ability to generalize to diverse populations beyond U.S. cohorts.

Notes from the diagnostic frontier

The findings suggest that AI tools like DeepSeeNet can enhance diagnostic consistency and speed when integrated into AMD workflows. Yet pairing human judgment with AI does not always outperform the model alone. In some cases, the algorithm led, while in others, clinical instinct filled the gaps.

The authors noted that further progress will require ongoing training, external validation and transparency. They made their models publicly available on GitHub for ongoing development.

In the end, DeepSeeNet did more than process pixels. It prompted an important conversation about how ophthalmologists and algorithms can co-exist in the diagnostic journey in service of the patient.

Editor’s Note: Read the full investigation, AI Workflow, External Validation, and Development in Eye Disease Diagnosis from JAMA Network Open.

References

- Peng Y, Dharssi S, Chen Q, et al. DeepSeeNet: a deep learning model for automated classification of patient-based age-related macular degeneration severity from color fundus photographs. Ophthalmology. 2019;126(4):565–575.

- Majithia S, Tham YC, Chee ML, et al. Cohort profile: the Singapore Epidemiology of Eye Diseases Study (SEED). Int J Epidemiol. 2021;50(1):41–52.

This content is intended exclusively for healthcare professionals. It is not intended for the general public. Products or therapies discussed may not be registered or approved in all jurisdictions, including Singapore.